Remember the Cadbury campaign that featured a hyper-localized version of Shah Rukh Khan, where the actor appeared to personally endorse small neighbourhood stores across India? The brand used generative AI to customize thousands of versions of the same ad at scale. Campaigns like this have pushed AI from the back office into the creative studio. Marketing teams now use AI to build digital avatars, generate multilingual content, and produce synthetic voiceovers and videos.

But authorities are concerned about potential misuse. In February 2026, the Government of India rolled out formal AI governance guidelines under the IndiaAI framework, signalling a structured approach to responsible AI deployment. Alongside this, enforcement continues under the Information Technology Rules (IT Rules) and the Digital Personal Data Protection (DPDP) Act. The regulatory focus centres on deepfakes, impersonation, misleading AI-generated content, and the lawful processing of personal data. Rather than creating a standalone AI statute, the government has embedded accountability within existing legal mechanisms, making it clear that organizations using AI in advertising must build transparency, consent, and oversight into their workflows before content goes live.

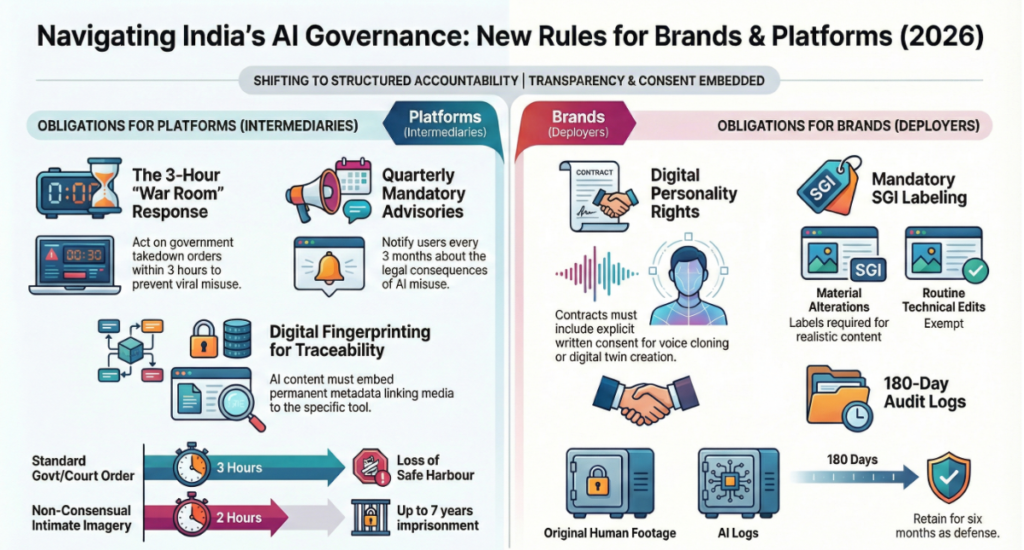

Importantly, the bulk of the new operational obligations fall on platforms that host user-generated content, such as social media companies, video-sharing platforms, messaging apps, marketplaces, and even company websites that allow public uploads or comments. These entities are treated in law as “intermediaries.” Brands that simply create and publish advertisements are not automatically classified as intermediaries.

The most stringent compliance requirements, such as rapid takedown windows, periodic user advisories, and grievance officers, apply primarily to those hosting public content ecosystems, and it is these entities whose safe-harbour protection depends on meeting them.

The Spirit of India’s AI Governance Guidelines

The February 2026 guidelines under the IndiaAI framework articulate a principle-led approach that emphasizes responsible development, safety, transparency, fairness, and human oversight. The government has signalled that AI systems deployed at scale, especially those influencing public opinion, consumer behaviour, or reputational outcomes, must operate within clearly defined ethical and legal boundaries.

Regulators are reinforcing a core expectation: organizations that benefit from AI must take ownership of its consequences. At the heart of the guidelines lies a principle-based framework described as the “Seven Sutras” of AI governance. Rather than prescribing rigid technical rules, the government has articulated foundational expectations. These require AI systems to be safe and reliable in operation; secure and resilient against misuse; fair and non-discriminatory in outcomes; respectful of privacy and personal data; transparent and explainable in their functioning; subject to meaningful human oversight; and governed through clear accountability mechanisms across their lifecycle.

What Platforms That Host Content Must Do

1. Mandatory Labelling Protocol

Companies that host user-generated content (such as social media platforms, video-sharing apps, or marketplaces where users upload content) must ensure AI-generated material is properly disclosed.

Synthetically Generated Information (SGI) that appears “real, authentic, or true” must be prominently labelled. For visuals, this means a “prominent and visible” disclosure like a watermark; for audio, a “prominently prefixed audio disclosure” (e.g., a voiceover at the start saying the audio is AI-generated).

Failing to do this will cost the platform its “Safe Harbour” protection under Section 79 of the IT Act. It is then treated in law like a “publisher” and can be held liable for the content for as long as it remains online.

2. Metadata & Provenance Embedding

Significant Social Media Intermediaries (SSMIs) and AI Tool Providers must ensure that SGI is embedded with permanent metadata and a unique identifier. This digital fingerprint must link the content back to the computer resource (the tool or platform) used to create it.

This is for traceability. If an AI ad is used for fraud or defamation, law enforcement must be able to trace the “digital trail” to the originating tool.
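To make the “digital fingerprint” idea concrete, here is a minimal Python sketch, assuming a platform records a SHA-256 hash of the generated file together with a random unique ID and the name of the generating tool. The record layout and the tool name are hypothetical; this is not the metadata format the rules prescribe, and it is not C2PA.

```python
import hashlib
import json
import uuid
from datetime import datetime, timezone


def make_provenance_record(content: bytes, tool_id: str) -> dict:
    """Build a hypothetical provenance record for a piece of SGI.

    The field names are illustrative assumptions, not a format
    prescribed by the IT Rules or by any provenance standard.
    """
    return {
        # Unique identifier assigned to this piece of generated content.
        "content_id": str(uuid.uuid4()),
        # SHA-256 fingerprint of the exact bytes that were generated.
        "sha256": hashlib.sha256(content).hexdigest(),
        # The computer resource (tool or platform) that produced it.
        "generator": tool_id,
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }


def matches(record: dict, content: bytes) -> bool:
    """Check whether a file's bytes match the fingerprint in a record."""
    return hashlib.sha256(content).hexdigest() == record["sha256"]


if __name__ == "__main__":
    ad = b"synthetic ad bytes"
    rec = make_provenance_record(ad, tool_id="example-genai-tool-v1")
    print(json.dumps(rec, indent=2))
    print(matches(rec, ad))         # True
    print(matches(rec, ad + b"x"))  # False
```

A bare hash only proves that two files are byte-for-byte identical: any re-encode or crop breaks the match, and on its own it says nothing about who produced the file. Real provenance systems layer signed metadata and watermarking on top of this basic idea.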

3. 3-Hour Response “War Room”

For Significant Social Media Intermediaries (SSMIs), the law reduces the window to act on a government or court order to just 3 hours (down from 36). For Non-Consensual Intimate Imagery (NCII), meaning intimate or sexual images shared without the subject’s consent, the window is even tighter: 2 hours.

This is to prevent offensive content from going viral. The government’s stance is that AI content spreads too fast for a 36-hour window to be effective in preventing riots, election interference, or personal trauma.

Failure to comply can lead to imprisonment for up to 7 years for the designated officers.
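Because the clock is measured in hours, it helps to compute the deadline the moment an order arrives. The sketch below assumes the 3-hour and 2-hour windows described above and uses timezone-aware timestamps so that an order received late at night is not misread; the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Response windows for SSMIs as described above, in hours. Treat these as
# assumptions to confirm against the notified rules and legal advice.
STANDARD_WINDOW_HOURS = 3
NCII_WINDOW_HOURS = 2


def takedown_deadline(received_at: datetime, is_ncii: bool) -> datetime:
    """Return the latest time by which the content must be actioned."""
    if received_at.tzinfo is None:
        raise ValueError("received_at must be timezone-aware")
    hours = NCII_WINDOW_HOURS if is_ncii else STANDARD_WINDOW_HOURS
    return received_at + timedelta(hours=hours)


def minutes_remaining(received_at: datetime, is_ncii: bool, now: datetime) -> float:
    """Minutes left before the deadline (negative once it has passed)."""
    return (takedown_deadline(received_at, is_ncii) - now).total_seconds() / 60


IST = timezone(timedelta(hours=5, minutes=30))
order = datetime(2026, 3, 2, 22, 15, tzinfo=IST)
print(takedown_deadline(order, is_ncii=False))  # 2026-03-03 01:15:00+05:30
print(takedown_deadline(order, is_ncii=True))   # 2026-03-03 00:15:00+05:30
```

Rejecting naive timestamps is a deliberate choice: a deadline that silently shifts by the server’s UTC offset could eat most of a 3-hour window.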

4. AI Vendor Auditing

Significant Social Media Intermediaries (SSMIs) and AI Service Providers must “deploy appropriate technical measures” to ensure users don’t create or share prohibited SGI (like CSAM, depictions of explosives, or deceptive impersonations).

This means you cannot “outsource” your liability. If your vendor’s tool doesn’t have filters, you are responsible for the output you publish.

Your company can be charged as an abettor under the Bharatiya Nyaya Sanhita (BNS) if the AI tool is used to generate illegal material.

5. Quarterly Advisory Updates

Significant Social Media Intermediaries (SSMIs) must notify users every 3 months about terms of service and the specific legal consequences of misusing AI (e.g., jail time for deepfakes).

The law requires you to actively remind users that “the rules have changed.”

Miss an update and you risk losing Safe Harbour, because you will have failed the “periodic due diligence” requirement.
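A simple scheduler can flag when the next advisory is due. This sketch assumes “every 3 months” means three calendar months after the last notice, clamped to the end of shorter months; the exact interpretation is an assumption to confirm with counsel.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping to the last day of a shorter month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def next_advisory_due(last_sent: date) -> date:
    """Assumed rule: the next advisory is due three months after the last one."""
    return add_months(last_sent, 3)


def is_overdue(last_sent: date, today: date) -> bool:
    """True once today is past the assumed due date."""
    return today > next_advisory_due(last_sent)


print(next_advisory_due(date(2026, 11, 30)))  # 2027-02-28
```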

6. Identity Disclosure Readiness

Intermediaries must provide information (including the identity of the creator) to a victim of SGI-related harm within a set timeframe.

This is to enable legal recourse. Victims of deepfakes (e.g., morphed videos) have a right to know who created the content so they can file a case under the BNS.

Penalty: Refusal to disclose identity when legally mandated can lead to a blocking of your entire platform and massive fines under the DPDP Act.

What Brands Must Do

Brands are not automatically intermediaries, but they are the primary deployers of AI in advertising. Under the IndiaAI Governance Guidelines and the IT Rules 2026, a brand’s creative freedom is now tethered to three pillars: Transparency, Consent, and Auditability.

1. Know the “Routine Editing” Exemptions

The law is strict, but it isn’t unreasonable. You do not need to label content if your AI use falls under “good-faith” technical corrections.

- No Label Needed: Routine tasks like color grading, noise reduction in audio, stabilizing shaky video, or using AI for basic formatting and compression.

- Label Mandatory: Any “material alteration” where AI creates a person, place, or event that looks real but didn’t happen (SGI). If the AI makes the content “indistinguishable” from reality, the label is non-negotiable.
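The distinction above can be encoded as a conservative pre-publication check. In this sketch the edit-type names are hypothetical labels for the routine tasks listed, and anything not on that list, or anything that fabricates a realistic person, place, or event, is treated as requiring a label.

```python
from dataclasses import dataclass

# Hypothetical names for the routine, good-faith corrections listed above.
# These are illustrative assumptions, not legal categories.
ROUTINE_EDITS = {
    "color_grading",
    "audio_noise_reduction",
    "video_stabilization",
    "formatting",
    "compression",
}


@dataclass
class AIEdit:
    kind: str  # e.g. "color_grading" or "face_swap"
    creates_realistic_person_place_or_event: bool


def label_required(edits: list[AIEdit]) -> bool:
    """Return True if any edit goes beyond routine correction.

    Conservative by design: an unrecognised edit type, or any edit that
    fabricates a realistic person, place, or event, triggers a label.
    """
    for edit in edits:
        if edit.creates_realistic_person_place_or_event:
            return True
        if edit.kind not in ROUTINE_EDITS:
            return True
    return False


print(label_required([AIEdit("color_grading", False)]))  # False
print(label_required([AIEdit("color_grading", False), AIEdit("face_swap", True)]))  # True
```

Defaulting unknown edit types to “label required” errs on the side of disclosure, which matches the non-negotiable tone of the rule.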

2. Secure “Digital Personality” Rights

When using a person’s face or voice to create synthetic content, your legal team must update its talent contracts.

- Explicit AI Clause: Standard “usage rights” for video no longer cover synthetic recreation. You must obtain specific written consent for voice cloning, face-swapping, or digital twin creation.

- Non-Transferability: Ensure contracts prevent AI vendors from using your brand’s celebrity likeness to train their own “base models” without additional permission.

3. Implement the “Prominent & Visible” Standard

The final rules moved away from the rigid “10% of screen” watermark proposed in the draft to a more flexible, but high-stakes, “prominent and visible” standard.

- Visual Ads: A watermark (e.g., “AI-Generated” or “Synthetically Generated”) must be perceivable throughout the video, not just tucked into the end credits.

- Audio Ads: Radio spots or Spotify ads must include a short audio disclaimer at the very beginning.

- The Risk: If your ad is “indistinguishable” from reality and lacks this label, social platforms are legally obligated to flag, shadow-ban, or remove it to protect their own “Safe Harbour.”
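A QA step before publishing could verify both requirements. The sketch assumes you already know the time spans (in seconds) during which the on-screen watermark is rendered and when the spoken disclaimer starts; the data model and the half-second tolerance are assumptions, not thresholds from the rules.

```python
def covers_full_duration(label_spans: list[tuple[float, float]], duration: float) -> bool:
    """True if the union of on-screen label intervals covers [0, duration].

    label_spans: hypothetical (start_seconds, end_seconds) pairs during
    which a visible "AI-Generated" watermark is rendered.
    """
    covered_until = 0.0
    for start, end in sorted(label_spans):
        if start > covered_until:
            return False  # a gap in which no label is visible
        covered_until = max(covered_until, end)
        if covered_until >= duration:
            return True
    return covered_until >= duration


def audio_disclaimer_first(disclaimer_start: float, tolerance: float = 0.5) -> bool:
    """True if the spoken disclaimer starts within `tolerance` seconds of 0."""
    return disclaimer_start <= tolerance


print(covers_full_duration([(0, 10), (10, 30)], 30))  # True
print(covers_full_duration([(25, 30)], 30))           # False: end-credits only
```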

4. Establish a “Provenance Trail”

In the event of a deepfake scam or a “brand hijack” using your assets, you must be able to prove what is yours.

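- Metadata “Birth Certificates”: Ensure your agencies use tools that support the C2PA (Coalition for Content Provenance and Authenticity) standard. This attaches a cryptographically signed record of the file’s origin and edit history to the file itself. Because some platforms strip embedded metadata on upload, keep the signed originals as well.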

- Audit Logs: Keep the original, non-AI “human” footage and the AI-generation logs for at least 180 days. This is your primary defense if you receive a 3-hour takedown notice for “unauthorized impersonation.”
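An audit-log entry might pair fingerprints of the human source footage and the AI output with the tool and prompt used, plus the date until which the entry must be kept. The field names and example values are hypothetical; only the 180-day figure comes from the guidance above.

```python
import hashlib
from dataclasses import dataclass
from datetime import date, timedelta

RETENTION_DAYS = 180  # minimum period suggested above; confirm with counsel


@dataclass(frozen=True)
class GenerationLogEntry:
    """One hypothetical audit-log record linking an AI output to its source."""
    source_sha256: str  # fingerprint of the original, non-AI footage
    output_sha256: str  # fingerprint of the published AI-generated asset
    tool: str           # name/version of the generation tool used
    prompt: str         # instructions given to the tool
    created: date

    def retain_until(self) -> date:
        """Earliest date this entry and its source files may be deleted."""
        return self.created + timedelta(days=RETENTION_DAYS)


def log_generation(source: bytes, output: bytes, tool: str, prompt: str,
                   created: date) -> GenerationLogEntry:
    """Fingerprint both files and bundle them with the generation details."""
    return GenerationLogEntry(
        source_sha256=hashlib.sha256(source).hexdigest(),
        output_sha256=hashlib.sha256(output).hexdigest(),
        tool=tool,
        prompt=prompt,
        created=created,
    )


entry = log_generation(b"raw shoot", b"final ad", "example-tool-v2",
                       "swap background to Mumbai street", date(2026, 1, 1))
print(entry.retain_until())  # 2026-06-30
```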

5. Vet Your “AI Supply Chain”

Liability under the Bharatiya Nyaya Sanhita (BNS) can extend to those who “abet” or enable a crime.

- Vendor Safety Checks: Ask AI vendors for their “Red Teaming” or safety reports. If your AI-powered chatbot or AI-generated ad unintentionally uses a slur or depicts prohibited items (like explosives), the brand can be held liable for failing to exercise due diligence.

- Data Residency: Under the DPDP Act, personal data (like customer faces used in personalized ads) may be transferred outside India unless the central government has restricted transfers to that country, so confirm where your vendors store and process it.

The rollout of the AI guidelines marks a shift from “anything goes” to a structured era of accountability. While the government favours innovation over restraint, it has made clear that responsibility for transparency lies with those who deploy the technology. Embedding consent and traceability into your creative process now keeps you compliant and ensures that, if a deepfake or a “brand hijack” happens, you have the legal and technical tools to protect your reputation and keep your audience’s trust.